For the last few weeks I have been busy implementing CGL MapReduce, a streaming-based map-reduce implementation that uses a content dissemination network for all its communication. Our main objective is to avoid the overhead imposed by the technique that both Google and Hadoop adopt in their map-reduce implementations, namely communicating data via files.

Instead of communicating the data between the map and reduce tasks via files, we use NaradaBrokering's publish/subscribe messaging for the data transfer. In most map-reduce use cases the output of the map task is significantly smaller than the input data. In addition, a file-system-based data communication mechanism is prohibitively slow for iterative map-reduce tasks such as clustering algorithms. These observations motivated us to implement CGL MapReduce.
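To make the idea concrete, here is a minimal sketch of map tasks streaming their intermediate pairs to a reduce task through a publish/subscribe channel instead of files. It is not CGL MapReduce code and does not use the NaradaBrokering API: the `Topic` class is a hypothetical in-memory stand-in built on Python's `queue.Queue`, and `map_task`, `reduce_task`, `run_job` and the word-count workload are assumptions chosen only for illustration.

```python
import queue
import threading
from collections import defaultdict

END = object()  # sentinel marking the end of one mapper's stream


class Topic:
    """Toy in-memory stand-in for a broker topic (hypothetical, not NaradaBrokering)."""

    def __init__(self):
        self._messages = queue.Queue()

    def publish(self, message):
        self._messages.put(message)

    def receive(self):
        return self._messages.get()  # blocks until a message arrives


def map_task(chunk, topic):
    # Publish each intermediate (key, value) pair as soon as it is produced,
    # rather than writing it to a file for the reducer to fetch later.
    for word in chunk.split():
        topic.publish((word, 1))
    topic.publish(END)


def reduce_task(topic, num_mappers):
    # Consume pairs as they stream in; stop once every mapper has sent END.
    totals = defaultdict(int)
    finished = 0
    while finished < num_mappers:
        message = topic.receive()
        if message is END:
            finished += 1
        else:
            key, value = message
            totals[key] += value
    return dict(totals)


def run_job(chunks):
    topic = Topic()
    mappers = [threading.Thread(target=map_task, args=(c, topic)) for c in chunks]
    for m in mappers:
        m.start()
    result = reduce_task(topic, len(mappers))
    for m in mappers:
        m.join()
    return result
```

Calling `run_job(["a b a", "b c"])` counts the words across both chunks. The reducer starts consuming while the mappers are still producing, which is the property a file-based hand-off does not give you.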

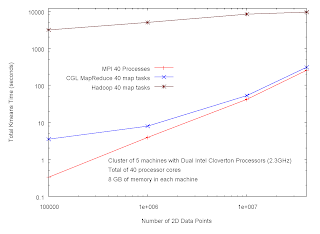

The following two graphs compare the performance of CGL MapReduce with other parallelization techniques used for SPMD programs. For this benchmark we use the k-means algorithm to cluster a collection of 2D data points.
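For readers unfamiliar with how k-means fits the map-reduce model, the sketch below shows one common formulation. It is an assumption-laden illustration, not the benchmark code: the function names, the partial-sum representation and the convergence test are all hypothetical. Each map call emits only per-centroid partial sums for its partition, so the map output is far smaller than the input, and the outer loop is why per-iteration communication cost matters so much.

```python
from collections import defaultdict


def nearest(point, centroids):
    # Index of the centroid with the smallest squared Euclidean distance.
    return min(
        range(len(centroids)),
        key=lambda i: (point[0] - centroids[i][0]) ** 2
        + (point[1] - centroids[i][1]) ** 2,
    )


def kmeans_map(points, centroids):
    # Map: one partial [sum_x, sum_y, count] per centroid for this partition.
    partial = defaultdict(lambda: [0.0, 0.0, 0])
    for x, y in points:
        acc = partial[nearest((x, y), centroids)]
        acc[0] += x
        acc[1] += y
        acc[2] += 1
    return dict(partial)


def kmeans_reduce(partials, centroids):
    # Reduce: merge the partial sums and compute the new centroids.
    merged = defaultdict(lambda: [0.0, 0.0, 0])
    for partial in partials:
        for idx, (sx, sy, n) in partial.items():
            merged[idx][0] += sx
            merged[idx][1] += sy
            merged[idx][2] += n
    new_centroids = []
    for i, old in enumerate(centroids):
        if i in merged:
            sx, sy, n = merged[i]
            new_centroids.append((sx / n, sy / n))
        else:
            new_centroids.append(old)  # keep a centroid that attracted no points
    return new_centroids


def kmeans(partitions, centroids, max_iter=100, tol=1e-9):
    # Iterate map and reduce until the centroids stop moving (assumed criterion).
    for _ in range(max_iter):
        partials = [kmeans_map(p, centroids) for p in partitions]
        updated = kmeans_reduce(partials, centroids)
        shift = max(
            abs(a[0] - b[0]) + abs(a[1] - b[1]) for a, b in zip(updated, centroids)
        )
        centroids = updated
        if shift < tol:
            break
    return centroids
```

Here the map calls run sequentially for simplicity; in a real deployment each partition would be handled by a separate map task.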

First, we compare the performance of CGL MapReduce with Hadoop and MPI. We increased the size of the data set from 100,000 points to 40 million and measured the total computation time under each implementation. The MPI program was the fastest of the three, while CGL MapReduce came very close to it for large data sets. Hadoop, however, took almost 30 times longer than both CGL MapReduce and MPI.

Figure 1. Performance of CGL MapReduce vs. Hadoop vs. MPI

Next, we performed a similar computation on a single multi-core machine to see the effect of various parallelization techniques on this type of computation. Java threads, MPI, and CGL MapReduce show converging results for large data sizes. In this case the main limiting factor is memory access, and hence the technique with the least overhead on memory access wins. In our experiment MPI was the fastest and Java threads came second, while CGL MapReduce was slightly behind Java threads. Again, Hadoop was about 30 times slower than any of the other programs.

The MPI program gets its performance from its C++ roots. The Java threads version is faster than CGL MapReduce simply because of the additional overheads in the map-reduce implementation. Hadoop's slowness is due to the overhead of creating and retrieving files for communication.

More analyses will follow soon.